SALZBURG GLOBAL

Culture, Arts and Society

Creating Futures: Art and AI for Tomorrow's Narratives

In a world brimming with polarization, inequity and complexity, understanding and shaping our future is more crucial than ever. Artists and cultural practitioners play a vital role in pushing the boundaries of how we understand ourselves and the world around us. They help us to move beyond the familiar, transcend borders between the present and the future, and encourage exploration into realms that seem improbable.

From May 6 to 10, Salzburg Global hosted its annual Culture, Arts and Society program. With the theme Creating Futures: Art and AI for Tomorrow's Narratives, this program brought together 50 participants from around the world, including artists, technologists, futurists, curators, activists, and other leaders in their fields. This publication is a collection of pieces meant to capture some of the engaging discussions that took place during the week.

DAY 1

Co-creating a Fairer Future for All in AI and Art

By Luba Elliott

Artificial intelligence (AI) has been having an increasing impact across industries over the past decade. Prompt-based tools such as ChatGPT and Stable Diffusion enable us to produce new texts and images quickly and easily from a set of instructions, while technologies such as facial recognition check our faces at passport control, recommendation engines make suggestions on what to buy on Amazon and voice assistants such as Siri and Alexa provide weather forecasts and adjust the music volume.

AI has many different guises, but on the whole it refers to a range of systems designed to mimic human cognitive functions such as reasoning, perception, and interaction with the world around us. Some of the most popular approaches today involve machine learning and reinforcement learning. In machine learning, AI algorithms learn to find patterns in large datasets of millions of images, texts, or sounds and then reproduce them to create new data, whilst reinforcement learning systems rely on rewards to learn optimal behavior in a particular environment.

The rise of these new AI technologies has brought to the forefront a number of concerns regarding how they are developed, trained, and applied across business and society. Issues such as bias in datasets, copyright infringement, job replacement, and governance are debated by policymakers and organizations.

As AI enters day-to-day work practices across sectors, it is crucial that we consider its long-term impact on global society and develop guidelines for fair, transparent, and responsible use of AI before unrepresentative visions, applications, and practices become entrenched.

Looking at the arts and culture sector in particular, the latest generative AI models, such as ChatGPT and DALL-E, are poised to threaten the livelihoods of illustrators, writers, and graphic designers through their ability to produce high-quality images in a variety of artistic styles, coherent essays for all contexts and effective logos and marketing campaigns.

Instead of simplifying the lives of creatives by taking away the administrative tasks of accounting and running a business, AI systems can now complete the creative work we typically enjoy. Worse still, these AI models have frequently been trained on datasets of artworks scraped from online art and photography communities, profiting from decades of creative effort without providing financial reward or acknowledgment.

Notwithstanding the current concerns, artists have had a long and fruitful relationship with technology for decades, from Harold Cohen’s art-making computer program AARON and the generative art of Vera Molnar to the current generation of artists such as Mario Klingemann, Sougwen Chung, and Refik Anadol who use AI to construct new understandings of the world, explore the potential of human-machine collaboration and create interactive experiences.

With these new tools come new creative possibilities, and it is vital that all artists are able to explore these in the global arena of multiple cultures and ways of seeing the world. To achieve that, we need to increase the levels of AI literacy and engage new and experienced generations of artists, underrepresented groups, and Indigenous communities so that everyone is able to share their perspectives authentically and make their mark in AI development.

With their ability to work critically with technology, artists play a key role in seeking out AI’s flaws, inaccuracies, and limitations, as well as questioning the applications of technology across society. Artists such as Adam Harvey, Mimi Onuoha, Kate Crawford, and Trevor Paglen explore ways of evading facial recognition, uncovering missing datasets, and highlighting the bias in image recognition systems. As these tools continue to develop and mature at a rapid pace, we need continuous artistic engagement to test out the unexpected use cases of technology and develop inclusive AI systems.

The Salzburg Global Seminar program, “Creating Futures: Art and AI for Tomorrow's Narratives,” co-created from the concerns, questions, and interests of 50 participants from 25 countries, will cover five themes that look at how art and AI can shape a better future for everyone. These will focus on human-AI relations, creative intelligence, ethics, AI for all, and Indigenous narratives.

These themes are particularly meaningful for today’s discussions on AI art because they explore the multiple ways of working with AI systems to enhance creativity, build on AI’s unique affordances, and develop new ways of collaboration between humans and machines. The associated ethical challenges, including intellectual property rights, governance models, and broader societal implications of generative AI, will also be addressed. Finally, the program will focus on creating more inclusive AI systems that promote cultural diversity and support global narratives, as well as developing strategies for integrating Indigenous voices and challenging colonial legacies.

By exploring these five different strands through the perspectives of artists, researchers, curators, and creative professionals, we plan to develop resources and guidelines that will help stakeholders in the broader AI and art ecosystems include diverse perspectives for the development of a better future for all.

DAY 2

The Future of Creative Expression and Ethics in AI

By Edison Chung

Artists have always worked with tools, from the paintbrush to the camera; humanity has created visual marvels with technological innovations. With the birth of artificial intelligence (AI), the inseparable link between artists and tools has never been more palpable and challenging. What is true creative expression, when tech billionaires control the tools that express creativity? What will the future of AI be, when it is built on the injustices of the past?

For many, AI has an almost mystical quality, but as Fellows peel this virtual onion, they remind us that it is, despite all its magic-like capabilities, purely a tool. More specifically, as journalist and writer Akihiko Mori puts it, it is a tool “forged in capitalism”. Ultimately, AI is built on global inequalities, and understanding AI outside of this political economy context is akin to drawing in the sand. As AI continues to develop, it was clear to the Fellows that these systemic power imbalances will determine the future of artistic expression.

Power structures are invisible but pervasive. In the development of AI, power comes from the ability to exclusively mine, select, and store data and the ability to set the narrative. Who is the creator, the artist or Big Tech? These profound questions of authorship demand a pluralistic approach that is missing from the current discourse on AI. Mona Gamil, an Egypt-based artist, elaborates, “We need to disrupt the positive branding of AI that is dictated by Big Tech and rewrite the narrative.” Indeed, Big Tech has constructed a narrative around AI safety, while the more pressing matter for the public is AI ethics. Jun Fei, Professor of Art and Technology at the Central Academy of Fine Arts, speaks of “value alignment” and the importance of diversity and inclusivity in setting the new principles and standards of AI. These principles empower marginalized groups, Indigenous cultures, and impoverished communities. More specifically linked to art, Fellows raised concerns over the value of the “human touch,” as artist and painter Phaan Howng reflects, “How can we teach a machine the physicality of artistic labor? The unpredictability of art?” Incorporating the ineffable human experience into language-based models is another incompatibility left out in the AI discourse, reiterating Fellows’ call for broader inclusivity.

The discussion also drilled into the roots of power imbalances, or what Oscar Ekponimo, Founder and CEO of Gallery of Code in Nigeria, referred to as the “hierarchy of influence” or, more explicitly, “capitalistic influences.” Capitalistic constructs are ingrained in and communicated through our everyday structures. One example is copyright laws, which intrinsically link art, and thereby creative expression, to trade agreements. For Micaela Mantegna, a lawyer and activist in digital ethics, copyright laws exclude other forms of labor and funnel power to a select few. As technology outpaces regulation, what was intended to protect creativity and artists has turned into an instrument of restraint. “Copyrights shrink the public domain,” said Micaela. “It excludes artists working with AI when the digital space is not scarce, and the digital world does not need to be scarce.”

Copyrights are just one iteration of a competition-based system that pits individuals and companies against each other. Fellows believed resetting these incentives across all domains would be a crucial determinant of an equitable and inclusive future. In the development of AI, Fellows pointed to realigning intentions and crafting principles that would foster collaboration. Octavio Kulesz, philosopher and digital publisher, shared a UNESCO framework that could guide countries to a culturally diverse and human-centric perspective on AI. While the framework could potentially steer countries toward self-determination in AI development, a more radical redistribution of powers and wealth was also explored. When suggesting alternatives to copyright, Micaela proposed taxation and universal basic income to rebalance the scales and compensate all labor in the creative process.

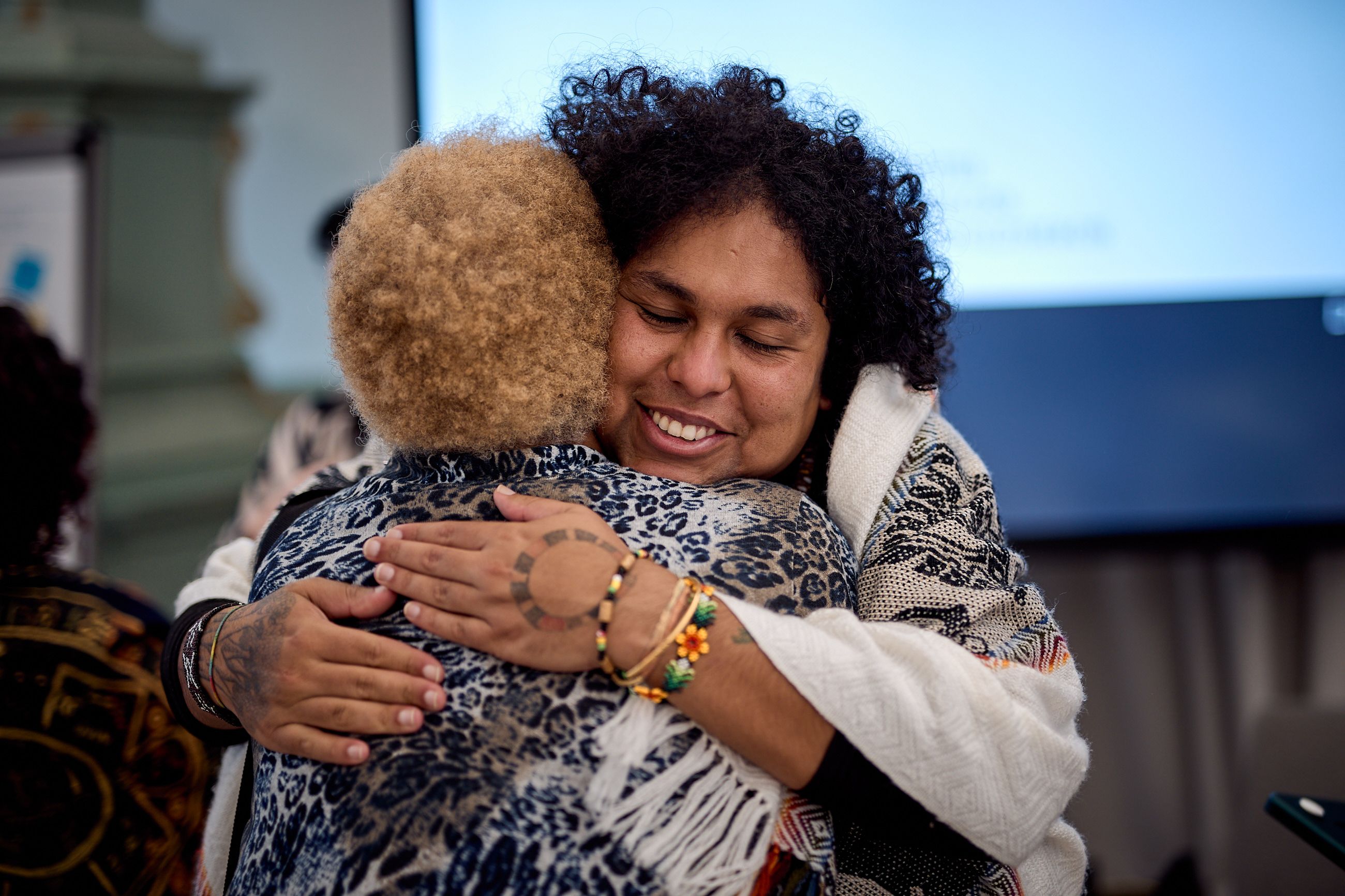

Although discussions have often posed more questions than answers, one thing is certain: the importance of honest and open conversations. Through their discussions, Fellows remind and show us that connection, compassion, and community have always been the core of human experience, and at this critical juncture, returning to what makes us truly human could well decide our collective future.

Can AI Be Responsible?

By Júlia Escrivà Moreno

Mutale Nkonde was born in Zambia, but grew up in the United Kingdom. Living in New York City, she runs a nonprofit called AI for the People, which works towards advancing policies that reduce algorithmic bias.

Júlia Escrivà Moreno, Communications Intern, Salzburg Global: How would you explain algorithmic bias to someone on the street?

Mutale Nkonde: Algorithmic bias is a situation where the racism that people experience in their everyday lives is expressed by machines. So, for example, there is this idea that all black people look alike and that we can't be told apart. That's a racist assumption. But facial recognition, which is an AI system that uses computer vision, also cannot recognize black faces. So, it's this idea that in normal life, people who do not want to see the individuality in black people, that becomes part of the way the system operates.

JM: Could there be a future where DEI experts are involved with the creation of AI models or AI policymaking?

MN: I certainly see that future... There's a very famous paper that was published... called Annotators with Attitude... These scientists found that in the data sets that were being built to identify toxic speech on social media, Black speech, African American Vernacular English (AAVE), was more often tagged as toxic when it wasn't; it was just normal speech. Things like "such and such was the bomb" in AAVE means that it's good, but it was tagged as a threat. "Something is fire," which means in AAVE that something is cool or hip, was tagged as arson, whereas racist speech, such as likening somebody to a monkey, wasn't tagged as toxic... Even in development, these ideas of racial bias are integrated by the people tagging the data.

I think where people of color really come in is first doing that research. People that do that research tend to be the people impacted by it. Second of all, by working with policy groups, research groups like ours, to bring that to the fore. So that's actually an example that we're going to be using in a race and AI paper that we're doing for the UN Human Rights Council, because they're trying to understand how this happens from a design perspective. How do we create a policy intervention? But it’s also something that we can take to U.S. Congress as well as companies. And I think the people that are doing this critical work are the people that are most impacted. And our role is to hire hopefully some of those people and empower them to build amazing careers outside of us.

JM: What are some ways that we can make AI models more inclusive? Who should be in charge?

MN: I think everybody needs to do it... I'm often in situations where I'm told black people don't work in AI, black women don't work in AI. And that's both a racist and sexist assumption. I just don't think enough black people work, and I don't think enough women work. And I think it's all our responsibility to make sure that our teams look like the rest of humanity.

JM: How do you look into the future? Do you see a more inclusive and less discriminatory AI or the opposite?

MN: I think we see the AI that we advocate for, and if our voices are not in these policy spaces, then we are going to see the AI of the people around the table who have traditionally been white, older, and who will think of things like inclusive technology as being "woke" when an inclusive technology is an effective technology. And if you cannot effectively design for the future that we're in, then you're just playing. I also think that we have the future that we create, and we get the future we deserve. In every moment, we should be involved in that. And I would like to think, in some small way, I'm creating a more inclusive future for AI and other technologies.

JM: Is responsible AI possible?

MN: Responsible AI is a goal. I think if we have capitalistic goals for AI, it can never be responsible. However, there are alternative ways of looking at capitalism. One of the people I really admire is Joseph Stiglitz, who is a Nobel Prize-winning economist... And he always says that there can be a capitalism in which workers are protected, in which non-discrimination is a goal, and in which the impact of industry and commerce, in this case AI, can have pro-social applications and can be regulated. And he calls that responsible capitalism. And I think if we have responsible capitalism, then we can have responsible AI.

JM: Have the discussions you've been a part of during the program given you any further ideas for your work?

MN: For sure. I hosted a discussion that was looking at protest, confidentiality and privacy, and the storage of video data... The group I was with... made me think of the ways of using encrypted networks like Signal, for example, in the United States, as a way to transmit information in moments of protest, because I think those of us that have been involved in the Palestinian encampments, I was part of the first one at Columbia, we now have all of this video data that's very politically sensitive, and we can't put it on our university servers because we were protesting at the university. I really appreciated being in a group, for example, with somebody who'd been involved in the civil rights protest and had faced very similar questions, and I would never have had that otherwise.

JM: Can you share a takeaway from this week?

MN: I think the questions that are being raised in AI futures are the questions that we've been raising about humanity. And I think that this is a good inflection point to really lean into these issues of representation, diversity, and inclusion, which are all conversations about power. Who has power? Who doesn't have power? Where is that power distributed? And it's exciting to be so central to those discussions because I certainly stand for the redistribution of power and the rewriting of history. And it's been interesting to do that work at Salzburg Global because I think so much of bringing critical people in, like me, to a space that has such a deep history of oppression is also creating a new power and reclaiming that space.

DAY 3

Beyond Colonial Codes: AI for a Multiverse of Indigenous Futures

By Júlia Escrivà Moreno

During the session “Beyond Colonial Codes: AI for a Multiverse of Indigenous Futures,” Fellows explored the intersection of technology and Indigenous sovereignty. Dr. Tiara Roxanne (Purépecha Mestiza), a transnational decolonial AI scholar and artist, and other Indigenous artists and activists discussed AI's transformative potential in amplifying Indigenous narratives and confronting colonial legacies. Tiara shared part of their work, Digital Attunement, which they have been developing in recent years and will explore in depth in their forthcoming book.

Maya Chacaby, a professor at York University, highlighted colonialism's profound impact on Indigenous communities. She has been working on the development of an Indigenous Metaverse called "Biskaabiiyaang," a digital realm where people can immerse themselves in her Indigenous culture, interact with Elders, and embark on quests to learn about the Anishinaabe culture. Through this platform, she aims to rewrite the narrative of Indigenous peoples and empower youth to reconnect with their cultural heritage and language.

Central to this resurgence is the urgent need for the revitalization of Indigenous languages, such as her own Anishinaabemowin, which faces the threat of extinction with only approximately 10,000 speakers remaining worldwide, while many more are learning it as a second language. Maya emphasized the importance of decolonizing education and storytelling, creating spaces where traditional knowledge can thrive outside of Western frameworks, thereby instilling a sense of responsibility in the audience.

Violeta Ayala, award-winning filmmaker and creative technologist, echoed Maya’s sentiments, emphasizing the resilience and diversity of Indigenous cultures. She called for open discussions and knowledge sharing, highlighting the Quechua community's thriving culture as an example of strength in the face of colonialism. Violeta cited the ancient proverb, “Until the jaguars tell their own story, the story of the hunt will always glorify the hunter.” During her intervention, she referred to the ongoing conflict in Gaza, emphasizing that “there are no indigenous futures without a free Palestine.”

Conceptual artist Şerife Wong brought attention to the darker side of technological advancement, highlighting the exploitation and environmental impact of AI infrastructure. She cautioned against the concentration of power in the hands of big tech companies, noting the disproportionate harm inflicted on marginalized communities, particularly in the Global South.

Şerife emphasized the importance of bottom-up AI initiatives rooted in Indigenous values. She highlighted the work of Te Hiku Media, an Indigenous media hub in Aotearoa that created and manages its own large language model, as an example of an Indigenous-led data sovereignty project that ensures the technological, economic and political decisions and benefits of AI stay within the community. She also challenged the prevailing narrative of technology as a force for connectivity, highlighting its role in perpetuating disconnection and exploitation.

The artist and researcher Walla Capelobo offered a unique perspective on the relationship between AI and Indigenous knowledge, drawing parallels between AI and the wisdom of plants. She advocated for a holistic approach to technology rooted in Indigenous principles of sustainability and reciprocity through the lens of decolonization. Kira Xonorika, interdisciplinary artist, author, and futurist, emphasized the importance of understanding history and challenging colonial binaries in envisioning the future of AI. She called for a more nuanced approach to technology that acknowledges Indigenous temporalities and values.

Some of these Indigenous artists worked together during the program to revise Salzburg Global’s DEI report. They presented their thoughts, suggestions, and recommendations for Indigenous self-determination and detachment from the institutions and ideologies of colonization. Tiara Roxanne presented their collective ideas and expectations, advising Salzburg Global to engage with Indigenous artists truthfully.

The session underscored AI's potential as a tool for Indigenous sovereignty and knowledge transmission, offering a beacon of hope for the future. It also highlighted the need for ethical and culturally sensitive approaches to technology. By centering Indigenous voices and values, we can challenge the colonial legacies that exist in the AI sector and continuously work towards a more equitable and inclusive future for all.

Diverging Perspectives: AI and the Arts

The use of AI in artistic expression is a polarising concept. We asked Malik Afegbua, storyteller and creative technologist, for whom AI is an integral part of his art, and Sherry Wong, conceptual artist who tackles societal impacts and issues of belief and bias in AI, what their take is.

By Aurore Heugas

Şerife (Sherry) Wong

Aurore Heugas, Communications Specialist, Salzburg Global: What concerns do you have about the potential risks associated with AI and its increasing presence in the arts world?

Şerife (Sherry) Wong, artist: So my main concern with the proliferation of AI tools at the moment is how it concentrates power in the hands of a few. AI technologies take a lot of computer power and money, it doesn't actually belong to that many diverse group of people. It’s just primarily a few companies, and that concentrates power. And when you collect and incentivize data collection at that scale, with all the AI hype going on, what you're going to get is power over in this direction. That gives the ability to companies only in like the United States pretty much, to have power over others because data helps make things more legible. It's a way of surveilling people to collect their data as well. And when you have that knowledge and you have the computers that can see and compartmentalize and make decisions, you're basically leading to authoritarianism.

AH: Your work often relates to the issues of belief and bias in AI. How do you see those manifesting within artistic creation?

SW: There is nothing new about AI. It seems new, but the technology itself is rooted in the 1950s. The math part of it is really from the 1980s, the big data stuff we've been doing whenever we've had all these computer chips for the past 20 years. It's the money part that's sort of new. And that data that AI is dependent on, this big data, it's our data. So it's very reflective of who we have been as people. And that means that it is full of bias, it is full of our racism. Our good parts, our bad parts, poor decisions that other people have made. So when you use an AI tool, that stuff comes up… If you're using an image maker and you ask it for images of a doctor, it is more likely to give you an image of a white man. Now they have filters on it so it doesn't do it as often, but the underneath embedded stuff is just us. It's the internet, and the internet is not reflective of the world. It's just the internet. And it's primarily a certain viewpoint from people of privilege. It's whiter, it's more male… So it replicates itself and invisibly can affect all the output and work that comes out of it. What I think you're going to see, because of the use of ChatGPT and all these art tools, the more popular they get, the more content will be out there, and it'll just be, subtly, a lot more mediocre and racist and sexist and biased against disabled people and like hurtful and biased against the poor, and all sorts of those kinds of issues are going to be more dominant.

AH: What do you think about the potential for a lot of art to become homogeneous? How do you see this impacting the diversity and richness of artistic creation?

SW: The way that people are using the tools are going to be in this homogeneous, neutralizing way because you're just replicating kitsch, and what is the average of all the arts, so there's a certain look to what it is. It takes creativity and an artist using these tools to make it cooler. I'm not as worried about it because artists are creative… They’re not going to be using the tools in that way. They try to break the tool. They try to make it expose itself. They play with it…

I am concerned about the use of these tools that takes away jobs from people. It’s not jobs like mine, because the kind of art I do is not … art that you can replace. I do conceptual, weird art. But for example, in Hollywood and the special effects industry… the people who are basically changing the color of a car from yellow to red, from scene to scene over and over again, those are outsourced jobs that are already less paid, already less valued and less respected. Those ones are going to be impacted. And we already know from the writers’ group that their jobs have been impacted too. People are looking for less freelance work. They're using these tools instead of hiring a writer. So the quality of work has gone down for the kinds of things that people never really wanted to pay much for in the first place.

AH: How do you see the dialog around the risks and weaknesses of AI in the arts evolving in the coming years? How do you see the relationship between artists and AI evolving?

SW: Right now there's a lot of narratives around the relationship of the arts to AI or the artist to AI. And the big thing to know about the narratives is that they're driven by fear. And that's what I see in the news all the time. The narratives are also ones of competition, like AI versus the human, artist versus artists. And one thing I keep hearing is like “AI is not coming for your job. Someone who uses AI is coming for your job, you better get with it”… And that's also just not true. It's a story. And we're telling all these stories because it markets the tools, and it's a choice.

People don't need to use AI to make art. They've never had to. I even saw this one post (that) was saying how AI has made it possible for anyone to make art now. What child hasn't made art? Anyone with a crayon has been able to always be a creator. So the shifting of the dialog that's necessary, the relationship that's missing or that needs to get worked on is a changing of a narrative… That there’s no competition, we don't need to be incentivized to use these tools, and people who do want to use them can use them in creative ways, but that they should be skeptical online…

Once you have a little bit of education, I think the narrative will eventually just shift because artists are smart and they're creative and they're very good at breaking the tools too. And when you break things, you expose exactly how they work and you expose all the problems in them and it will just fall apart. So I have a lot of hope that artists are going to be the ones who are leading in this space for other members of the public to learn about these tools and have their own stories that are generated by people and not being told by a big tech company.

Malik Afegbua

Aurore Heugas, Communications Specialist, Salzburg Global: How did you first become interested in incorporating AI in your art?

Malik Afegbua, CEO of Slickcity Media: It was mostly for research on how to use AI for my traditional storytelling. I'm a filmmaker as well, so I shoot TV commercials, films, etc. So I used to use that for storyboarding, for visualization. But I also realized that it's powerful enough for you to create things that don't exist. So I started to experiment with that. And that's how I use it now.

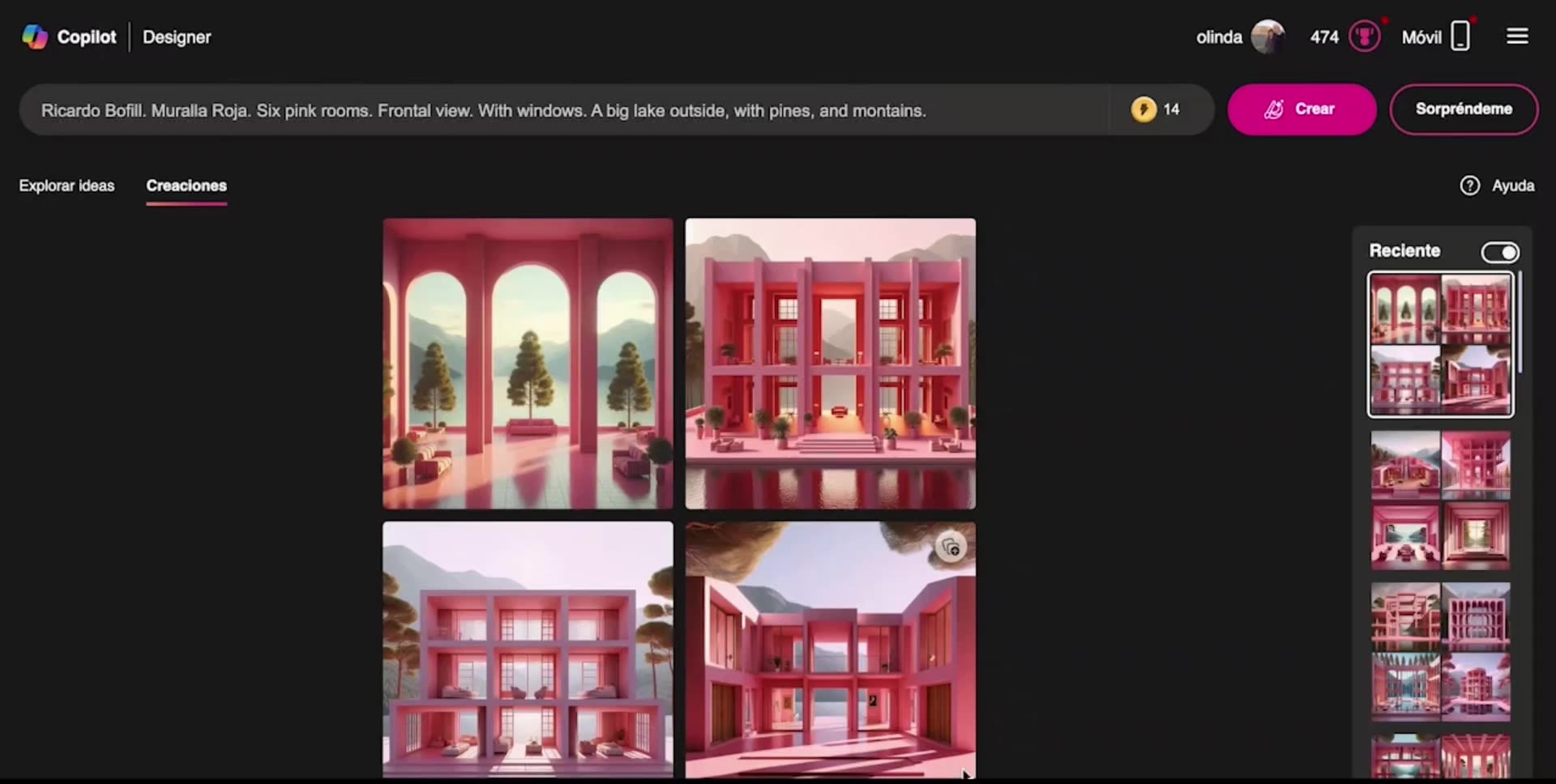

AH: Can you share a little bit more about what kind of creative process goes into working with AI?

MA: I look at AI as a tool, so it's mostly co-creation. I don't let it dictate the final result for me. So it's either I am mostly trying to be inspired by it or try to speed up my workflow or try to create more variations of things. But what I do is, I work with other softwares as well, like Photoshop, Illustrator and all of that, just to create the actual things I want to do. I'm very particular about the ethics of it. I make sure everything that I work with is my content, so that means that I could copyright it. That means that I could sell it or work for big brands, which I have been doing already.

AH: Your project, The Elder series, is very innovative and challenges ageism. What inspired you to start it?

MA: It was, first of all, from a personal space. You know, I love my mom so much. She wasn't feeling well, she was on life support. That was something that I was not used to, so I wanted to create her memories with AI, her demographic community, not in a suppressed state, but in a more elegant (one). I felt like I could use AI to do that. But it was a shocker for me because I realized that the AI was biased, so I literally had to train it to make it understand what we really look like. Not the biased image from the internet.

AH: Can you talk more about AI bias and what you've noticed?

MA: The bias in AI is based off of what it's trained on. And if you look at the inception of photography in Africa, it's mostly based on the colonial masters, showing the progress of Africa… And if you check out the pictures back then, most of the pictures were taken (with) Africa as a subject, or to show how powerful they were in comparison to how suppressed Africans were. The problem about that is, that is what stock imagery and AI is being trained on, alongside every other false narratives from the media… So that problem meant that AI, you know, (doesn't) understand how to filter what's right or what's wrong. So that meant that I had to do something about it… I had to showcase that, using the data that I had, and train it and make variations of those to come out with something different.

AH: What kind of potential do you see for AI to revolutionize arts?

MA: I look at AI as a blank canvas. First of all, I don't think it's intelligent. I think it's an assistance, because at the end of the day, you have to be experienced enough to create something unique from it, or else you'll be creating something very generic, so that means there's a blank canvas. If you're a creator or not, you have to be a part of it, to train it, to have the right types of data sets. So that could be used for the right types of solutions, or for the right types of stories, or for just envisioning and reimagining the future or the past, what it should be, you know? So that is something that I feel is profound because of the fact that you could alter it for the right reasons and create mind blowing things from that.

AI, Art, and Creativity: Exploring the Artist’s Perspective

By Luba Elliott

Art and technological developments have always gone hand in hand, with every new invention of color pigment, drawing tool, and image capture mechanism leading to new aesthetics and artistic practices. Early computer-generated art experiments began in the 1960s, with artists such as Vera Molnar, Ernest Edmonds, and Harold Cohen testing the creative potential of the machine and developing programs capable of producing drawings autonomously. Recent advancements in machine learning and neural networks have significantly expanded AI's creative capabilities, with tools like DeepDream, GANs, and Midjourney opening up a range of artistic styles, collaboration, and co-creation opportunities.

The Salzburg Global program on "Creating Futures: Art and AI for Tomorrow's Narratives" brought together artists, filmmakers, and creative professionals from all over the world working with AI in a multitude of capacities. A selection of these creative practices and approaches is detailed below.

Amy Karle, an American artist exploring the implications of technology on humanity, has been working with AI and generative design since 2015—the beginning of the deep learning revolution that gradually brought artistic practices with AI to the forefront of contemporary culture. For Amy, AI is a key partner in the creative process that complements our human skills. She stated, "The synergy between an artist's intuition, creativity, and critical thinking combined with AI's analytical capabilities opens up new realms of possibility, prototyping, and creativity. This results in art that can be richer and more nuanced, and not possible to create in any other way. AI's potential may seem limitless, but it is our human imagination that gives it direction and purpose. By combining AI's capabilities with our critical thinking and creative vision, we can explore new frontiers and redefine the future."

Paraguayan artist Kira Xonorika explores AI's capacity for world-building through the colorful characters inhabiting imaginary worlds, as seen on her Instagram page. The artist commented: "I use AI because it expands my creative potential, but I'm also interested in the cross-pollination processes that occur with the machine. The strength of symbiosis in co-creation. It's different from other tools in the sense that it allows one to visualize a reflection of oneself and also actively create worlds. I'm interested in the potential of worldbuilding with AI."

As a film director and photographer, Kenyan artist Barbara Khaliyesa Minishi began working with AI in 2022 during the post-production of her short film "Inheritance" (2023). Her experience with AI tools has allowed Barbara to delve deeper into her narratives and expand the visual and thematic elements of her films, demonstrating how technology can complement traditional storytelling methods. After using the AI creative suite Runway, Barbara stated, "From then on, I realized the value of AI tools for indie filmmakers. Nonetheless, my view is both open and yet discerning. I still absolutely value and enjoy the 'analog' type of art such as film photography and will continue doing so. My approach to AI is... how can AI tools be an ally in helping me articulate ideas and solutions that can allow me additional space for play and experimentation as a film director."

Meanwhile, Eddie Wong, a Malaysian artist and filmmaker, commented: "In essence, all AI art is about AI—it reflects back on the technology itself, its potentials, and its limitations." His work includes the film "Portrait of the Jungle People" (2022), which uses text-to-image-based AI tools to generate images for the film, exploring the story of the disappearance of his grandfather and the memories of his closest relatives. Eddie’s perspective underlines the critical aspects of the technology, suggesting that AI not only serves as a creative tool but also as a medium for questioning the nature of technology and its impact on human life and memory. Through his film, Eddie engages audiences in a dialogue about the intersection of technology and humanity, using AI-generated images to evoke emotions and thoughts that resonate deeply with viewers.

Finally, Egypt-based digital and performance artist Mona Gamil, author of the short film "AI Confessions" (2023) about the impact of AI on film and video, noted that "AI is a matter of fact—as an idea, as your new job competition, as a specter or bogeyman. If you're into tech art, it's a natural progression, and equally natural to feel anxious about it." Mona acknowledges the dual nature of AI, balancing excitement with caution. By exploring the tensions and anxieties surrounding AI, Mona’s art serves as a critical examination of the technological landscape, encouraging viewers to reflect on the broader societal implications of these advancements.

The integration of AI into the creative process at the brainstorming, creation, and post-production stages is reshaping the nature of artistic expression. Artists like Kira Xonorika, Barbara Khaliyesa Minishi, Eddie Wong, Amy Karle, and Mona Gamil are at the forefront of this transformation, each working with AI as a collaborator, partner, or tool to probe its creative potential. Their practices demonstrate that while AI offers unprecedented opportunities for exploration, it also raises important questions about authorship, value, and the future of artistic labor.

As we move forward, the art community needs to engage in critical discourse around AI and creativity, ensuring that this powerful technology is harnessed responsibly. By embracing thoughtful, responsible, and inclusive approaches, artists can use AI to expand their creative horizons, explore new aesthetic possibilities, and redefine the future of art. This ongoing dialogue and experimentation will be crucial in shaping an art world where human ingenuity and AI coexist, driving forward the evolution of artistic practices in ways that honor both tradition and innovation. Through this reflective engagement with AI, artists can continue to create impactful work that pushes the boundaries of art as we know it.

AI Literacy: The Foundation of a Brighter AI Future

By Luba Elliott

Artificial Intelligence (AI) is a transformative force reshaping numerous sectors, including the arts, the creative industries, and many office jobs. As AI becomes increasingly pervasive, it is crucial for everyone, from artists and creative professionals to the general public, to grasp its fundamentals, implications, and nuances. By understanding how these systems are built, evaluated, and deployed, we will be able to use them more effectively and responsibly.

During the Salzburg Global program on "Creating Futures: Art and AI for Tomorrow's Narratives", AI literacy was identified as one of the key areas for future development. Fellows of the program, including activist Micaela Mantegna and artists Phaan Howng and Eddie Wong, contributed to the discussions and the creation of the group’s AI Literacy Curriculum.

Phaan Howng is an American-born Taiwanese artist making paintings, immersive installations, and performances on the theme of Earth’s landscape in a post-human future. From her perspective, “AI is ableist against those who are computer or technology illiterate...and don't care”. For Phaan, there is a need to acknowledge that AI is driven by humans: “The ‘information and knowledge’ supplied to AI platforms is populated with text, images, data, as uploaded by humans with access to internet, software, social media and media platforms. To be ‘AI literate’ is to equip individuals with the knowledge, skills, and ethical framework to recognize this situation, and that those who use AI should proceed with mindfulness–such as checking facts, biases, and sources.”

Phaan emphasized the necessity of prioritizing ethics: “Given the significant human influence on AI, and vice versa AI’s influence on humans, it is important to place a strong emphasis on ethics. We need to think critically about the backend of AI development, considering factors such as who is designing the platforms, who owns the datasets, who develops the business models, and who has the right to train and control AI models.” To achieve this, Phaan advocates for a public service announcement (PSA): “It's imperative that a huge PSA about AI has to be made aware to the general public, especially now that big tech companies are pushing it on us by integrating it into their software, apps, and search engines without our understanding of AI and our consent. I think this PSA has to come from the government and not from the tech companies themselves and be taught in schools.”

Meanwhile, Eddie Wong, a Malaysian artist and filmmaker, commented on the specific requirements for artists: "AI literacy for artists isn't just about mastering new tools; it involves a multifaceted understanding of the technical, philosophical, artistic, and ethical dimensions of machine learning. ML models are not static tools but dynamic systems that create emergent outputs, constantly evolving based on the data they process, often leading to unexpected results. Dataset provenance is a crucial factor, including who designs the platforms, who owns the datasets, who develops the business models, and who has the right to train and control AI models. To achieve AI literacy, artists should grasp the technical aspects of AI, such as machine learning and neural networks, and critically engage with its ethical and societal implications.”

Eddie’s perspective emphasizes the importance of artists engaging with AI on multiple levels. They must understand not just how to use AI tools, but also the underlying mechanics, data provenance, and ethical considerations of these technologies. This comprehensive approach will allow artists to leverage AI creatively while highlighting its limitations and being mindful of its broader impacts.

As for the impact of AI on artists themselves, there have been a number of projects over the past few months that deal with companies training AI systems on artists’ works, typically without their consent. Research projects such as Nightshade allow artists to “poison” their data so that it causes AI models to behave in unpredictable ways, while initiatives by artists such as Holly Herndon’s Spawning AI and Ed Newton-Rex’s Fairly Trained offer solutions for both artists and AI developers to declare and respect data preferences.

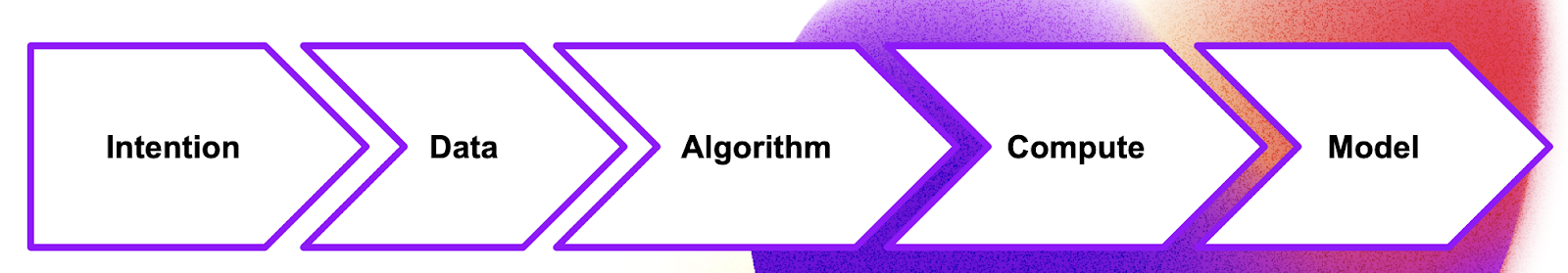

However, this is not the only type of intellectual labor that powers the AI systems of today. In her article “ARTificial: Why Copyright Is Not the Right Policy Tool to Deal with Generative AI” for the Yale Law Journal, Micaela Mantegna, a video games lawyer and activist known as the “Abogamer”, looks at the types of labor involved in the AI development process and the different forms of recognition they receive. She states that “OpenAI has acknowledged that models would be irrelevant or ineffective without training on copyrighted materials. If so, the same is true about ‘ghost work’ - the contributions of data workers globally are indispensable to fine-tune and sanitize AI models. Recognizing one form of intellectual labor within the copyright framework while neglecting to acknowledge equally relevant contributions of other types of labor results in an unfair distinction that privileges one type of worker over another.”

Micaela highlighted the need for fair treatment of all contributors to AI systems: "Intellectual labor is particularly evident in the context of the labor inputs used in the development of generative AI (GAI) models, where both creative works and other types of more pragmatic intellectual labor are intertwined in the training process. Privileging creative intellectual labor over data-classification labor creates an unfair hierarchy within the AI industry and further stigmatizes ghost workers. Unlike copyright, policy interventions would allow regulators to design a uniform approach that recognizes and compensates all contributors alike.”

As emphasized by Phaan, Eddie, and Micaela, AI literacy is a crucial element for the future advancement of our society and a key pillar in ensuring that AI systems are developed in a way that takes everyone’s needs and contributions into account. AI literacy is not just about mastering technical tools, but also about understanding the ethical, societal, and labor implications of AI. By incorporating these insights, we can build a more comprehensive and equitable approach to AI literacy, benefiting individuals and society as a whole.

AI Literacy Curriculum

By Angela Utibe Peters, Stefan Brandt, Phaan Howng, Mariano Sardon, Eddie Wong, Elena Said, Aibe Elukpo, Nolan Dennis, Serena Marija, Guy De lancey, Kiley Arroyo, Jun Fei, and Erwin Maas

We think AI literacy is a core competency for navigating futures through practical engagement with tools that are available and imagining tools that are not yet available. We need to consider all ethical guidelines and be inclusive but not prohibitive while keeping the space open for artistic freedom.

What is AI literacy?

AI literacy refers to the critical understanding and knowledge of artificial intelligence (AI) concepts, principles, and applications from diverse, inclusive, intercultural perspectives. It involves comprehending how AI technologies work, their impact on society (environmental, cultural, political, economic, social, historical, spiritual practices, etc.), and how to use them responsibly with basic competencies and skills.

Categories of AI literacy

AI literacy can be categorized into several aspects:

1. Conceptual Understanding: This includes knowledge of fundamental AI concepts such as:

- Machine Learning: Teaching a computer to paint like Van Gogh by showing it his works, so it can create its own machine-generated masterpieces.

- Neural Networks: A vast, interconnected web of nodes that process information together, like an artist's mind weaving colors, shapes, and meanings.

- Deep Learning: Mastering the principles of art itself, with each layer of the neural network gaining a deeper grasp of features, from basic forms to intricate details.

- Natural Language Processing (NLP): Giving computers the ability to interpret and generate human language, like a paintbrush that responds to voice commands.

- Computer Vision: Endowing AI with the eyes of an art critic, able to analyze composition, color, and style, and even create or identify forgeries.

2. Technical Skills: Proficiency in using AI tools and platforms for tasks like:

Level 1: Entry/Basic Level

- Knowledge of consumer AI tools

- Prompting

- Basics in combining multiple applications

- Integrating into your workflow

3. Ethical Considerations: Awareness of ethical issues surrounding AI, including bias, fairness, transparency, privacy, accountability, authorship, copyright, plagiarism, theft, data, labor extraction, ghost work, opacity, automation, culture gatekeeping, and who profits.

4. Societal Impact: Understanding the societal implications of AI for areas like employment, healthcare, education, governance, and the environment, as well as for cultural, intergenerational, political, economic, social, historical, and spiritual practices.

5. Critical Thinking: Ability to evaluate AI applications, claims, and potential biases critically and the ability to hold ambiguity and paradoxes.

6. Creative Applications: Applying AI techniques to manage efficiencies, enhance everyday processes, eliminate repetitive tasks, save time, and support ideation, while understanding the limitations and constraints of AI systems.

7. Communication: Being comfortable talking about AI-related topics.

8. Continuous Learning and Evaluation: Keeping up with the latest developments and emerging trends to develop literacy skills and continuously reassess the use-value of AI.

Empowering Artists: AI Literacy Workshops / Modules

Module 1: Introduction to AI for Artists

Further readings/references

- https://aipedagogy.org/guide/

- http://manovich.net/content/04-projects/107-defining-ai-arts-three-proposals/manovich.defining-ai-arts.2019.pdf

Module 2: Ethics and Bias in AI Art

Further readings/references

- Refer to Publication of Ethical Guidelines (group)

- Refer to Intercultural AI Development Toolkit (group)

- https://mitpress.mit.edu/9780262537018/artificial-unintelligence/

- https://atrium.ai/resources/ethical-ai-real-world-examples-of-bias-and-how-to-combat-it/

- AI generated images are biased, showing the world through stereotypes - Washington Post

- An MIT Technology Review Series: AI Colonialism

- https://researchvalues2018.wordpress.com/2017/12/20/tega-brain-the-environment-is-not-a-system/

- https://www.versobooks.com/products/735-the-eye-of-the-master

Module 3: AI Tools and Techniques for Artistic Creation

Further reading/references

- https://majusculelit.com/machine-marionettes/

- https://beta.dreamstudio.ai/generate

- https://midjourney.co/

- https://www.technologyreview.com/2022/04/22/1050394/artificial-intelligence-for-the-people/

- https://pharmapsychotic.com/tools.html

- Text to Image:

- Midjourney

- Stable Diffusion

- https://beta.dreamstudio.ai/generate

- RunwayML

- LLM (Large Language Model):

- ChatGPT

- Claude 3

- https://aipedagogy.org/guide/tutorial/

Module 4: Application of AI-driven Interactivity and Collaboration

Further reading/references:

- George Legrady - “Pockets Full of Memory (2001)” - to understand the basics of neural network concepts, such as classification of data, through an interactive piece

- Tega Brain https://tegabrain.com/artwork

- Anne Bogart’s ‘The Viewpoints Book’

- https://stilluntitledproject.wordpress.com/wp-content/uploads/2014/11/anne-bogart-and-tina-landau-the-viewpoints-book.pdf

- William Forsythe’s "Improvisation Technologies"

Module 5: Showcase and Reflection

Further reading/references:

Throughout the curriculum, emphasis will be placed on hands-on learning, group discussions, and peer collaboration to foster a supportive and creative learning environment for artists and cultural practitioners to explore and harness the potential of AI in their artistic practice.

People talking about AI (Aibe Elukpo and Elena Said with Runway)

Principles for Ethical AI Use in Art and Cultural Practices:

The following principles are intended to increase awareness without limiting artistic expression and creative freedom.

1. Promote Intercultural, Intersectional, and Interspecies Justice

- Be accountable to these aims at multiple levels (e.g. epistemological to distribution) to ensure AI-generated artworks and projects reflect diverse perspectives and avoid reinforcing stereotypes or biases based on race, gender, ethnicity, or other characteristics.

- Seek out and maintain a diverse web of trusting relationships (e.g. people to people, people to AI, people to planet).

- Reduce barriers to participation, particularly at the margins.

- Understand the diverse worldviews and both implicit and explicit biases that we bring to the use of all tools.

- Protect and promote diverse intelligences, cultural identities, abilities, imaginations, and cultural logics.

2. Power and Empathy

Create space for all peoples to express their power by using AI to amplify the voices, stories, and experiences of diverse communities and individuals. Foster empathy and mutual understanding through AI-driven engagement that promotes social cohesion, care, and intercultural, intergenerational, interdisciplinary, and interspecies dialogue.

3. Transparency and Attribution

- Proactively acknowledge your source materials.

- As appropriate, obtain free, prior, and informed consent.

- Cultivate trust as a precondition for building a safe environment for this work. For example, label the art piece with “AI-Generated…” as if it were a medium, like acrylic paint, marble, found footage, etc.

- Support the sharing of resources and data sovereignty.

4. Ecological and Social Justice

Using AI technologies is resource-intensive, allowing colonial patterns of harm to persist. These harms include intensive extraction of natural resources, exploitation of marginalized communities, and inequitable accumulation of wealth. Understanding these impacts will enable you to make conscious choices that reduce these effects.

5. Digital Technologies and Their Impacts

Digital technologies are continually evolving in context- and culture-specific ways. Therefore, it is necessary to stay in tune with emerging developments while also considering those who do not use these tools and the implications for your artistic practice.

By adhering to these principles, artists and cultural practitioners can harness the transformative potential of AI while upholding social, ethical, and cultural awareness in their artistic endeavors.

In conclusion,

We need to recognize that AI is essentially human-driven. The “information and knowledge” supplied to AI platforms is populated with text, images, data, and opinions uploaded by humans with access to the internet, software, social media, and media platforms. To be “AI literate” is to have the knowledge, skills, and ethical framework to recognize this situation, and those who use AI should proceed with mindfulness, checking facts, biases, and sources.

Given the significant human influence on AI, and vice versa AI’s influence on humans, it is important to place a strong emphasis on ethics. We need to think critically about the backend of AI development, considering factors such as who is designing the platforms, who owns the datasets, who develops the business models, and who has the right to train and control AI models.

* This is a first draft/framework of a living curriculum document that was co-written with ChatGPT. It can be edited, added to, and changed. As a next step, the schedule, further readings, exercises, and assignments need to be refined.

This is about artists, creative expression, and thinking creatively about social arrangements: the enhancement of human creativity and imagination in many realms.

“We prompt therefore we are……perhaps”

Bias in AI (Aibe Elukpo and Elena Said with DALL-E)

Artistic Image of Neural Networks, Aibe Elukpo and Elena Said with DALL-E

An artist prompting, Aibe Elukpo and Elena Said with DALL-E

Ethics in AI, Aibe Elukpo and Elena Said with DALL-E

People talking about AI, Aibe Elukpo and Elena Said with Runway

Non-Western Perspectives on AI:

Art Ecosystems in Africa, Americas, and Asia

Written by Luba Elliott

Artificial Intelligence (AI) has become a central topic in global discussions about technology, ethics, and the future of human society. While much of the discourse is dominated by Western perspectives, it is crucial to include voices from diverse communities worldwide to ensure a global vision for AI. Artists from Africa, Latin America, Asia, and Indigenous communities broaden the conversation, challenging us to think differently about the role of AI in our lives.

Diversity was a key feature of the Salzburg Global program on "Creating Futures: Art and AI for Tomorrow's Narratives", with Fellows hailing from 25 countries and a plethora of organizations and artistic and creative practices. It was a rare opportunity to meet so many creative practitioners from all over the world in one place. Below, some of the Fellows describe their projects and local AI art ecosystems.

Doreen A. Ríos, director of the online digital art platform [ANTI]MATERIA in Mexico City, described the AI scene in Mexico as “twofold, one rooted in what I like calling ‘machine-whispering’ which encompasses practices such as that of Canek Zapata. The other one is older and has to do with the mythologies of non-human collaborators. Both always looking into the commons, both founded on replicable and shareable strategies. The second one of course can be seen in the work of Malitzin Cortés or Anni Garza Lau.” Zapata looks at automated writing models and visual internet languages, while Cortés operates as a creative technologist, working between live coding, live cinema, and installation. Garza Lau, a transdisciplinary artist and programmer, explores the use and effects of technological devices in everyday life.

Over in Nigeria, Oscar Ekponimo is the founder & CEO of Gallery of Code, described as “Africa's first transdisciplinary design lab at merging Arts, Science, and Technology to address Africa's challenges”. When asked about the local ecosystem, Oscar commented, “The AI in Art scene in Nigeria is thriving with a lot of creativity; the first AI in Art Summit in Nigeria held in 2018 was organized by Gallery of Code and Ars Electronica. Since then, we have had artists like Malik Afegbua who created 'The Elders Series' - an AI-generated fashion show for the elderly, and visual artist Jibril Baba, an artist in residence at Gallery of Code incorporating AI for his art-driven innovation called ‘RECALL’ - a modular storage prototype that uses AI to monitor food spoilage of roots and tubers.” The visual artist Malik Afegbua’s "The Elders Series" gained worldwide press coverage for challenging stereotypes with its portrayal of stylish older people on the fashion runway, while the innovator Jibril Baba’s "Recall: Spirit of the Old", a possible solution to the food and water issues in North-eastern Nigeria, was supported by The S+T+ARTS Residency.

Based in Canada, Maya Chacaby is Anishinaabe, Beaver Clan from Kaministiquia (Thunder Bay). A Professor at York University, Maya created a story to teach her students Anishinaabemowin (the Ojibwe language) and realized the potential for making the resources available online to anyone eager to learn the language. Thus the Biskaabiiyaang project was born, which combines traditional Anishinaabe storytelling with metaverse technologies. Set in a post-apocalyptic world invaded by linguicidals, the immersive virtual game invites players to save the language from extinction by learning from nature and the Elders and exploring the ruins. This project is part of the UNESCO International Decade of Indigenous Languages (2022 – 2032) and will be continuously developed to improve the skills of second-language learners. Upon completion, it will act as a blueprint for endangered Indigenous languages.

Meanwhile, June K, an artist known for her phygital installations with red thread, works between LA and South Korea. She sees the Korean AI art scene as a vibrant space, where “the development of Korean-based AI language models by companies like Naver has democratized access to AI technologies, empowering artists of all backgrounds to explore new creative frontiers”. Alongside established institutions such as the Art Center Nabi, which has supported and documented the work of early Korean artists working with technology, newer spots are cropping up, such as The Uncommon Gallery in Gangnam. Founded by Kim Min Hyun of AI Network, it is the first web3 gallery in Korea dedicated to promoting AI art, attracting significant footfall and attention for a plethora of artists working in digital, web3, NFT, and AI-related art forms.

June was also keen to highlight her experience of working with the generative AI tool Midjourney as a Korean: “In its early stages, Midjourney struggled with accurately representing Asian faces, often producing inaccurate results for Korean or Asian faces. Users also encountered challenges with Midjourney's representation of traditional Korean costumes and mythical creatures from Korean folklore, indicating the need for a better understanding of cultural context. While Midjourney has made progress, there is still room for improvement in accurately representing Asian faces, traditional attire, and cultural concepts. Incorporating more detailed data and understanding cultural nuances will enhance Midjourney's accuracy and cultural sensitivity.”

These perspectives from Mexico, South Korea, Nigeria, and the Anishinaabe Beaver Clan broaden our understanding of some of the communities working with and thinking about AI globally, challenging dominant Western narratives and proposing alternative visions of what AI can and should be. They emphasize the importance of grassroots community efforts, the need for preservation of cultural heritage, and the necessity of developing AI models that accurately represent all global cultures.

To truly harness the potential of AI, it is essential to engage with and learn from these diverse perspectives. This means creating platforms for artists from different regions to share their work, fostering cross-cultural collaborations, and ensuring that AI development is guided by a plurality of voices. Only by doing so can we build a future where AI serves the needs and aspirations of all humanity, rather than a select few. As we move forward, it is imperative that we continue to listen to and amplify non-Western voices, ensuring that AI evolves in ways that are equitable, sustainable, and truly reflective of our global society.

The Charter for AI Ethics

Written by Glen Calleja, Mona Gamil, Martin Inthamoussu, Amy Karle, Monica Lopez, Akihiko Mori, and Stephanie Meisl

1. Preamble

The advent of Artificial Intelligence (AI) has presented distinct opportunities and challenges for the future of societies. This charter seeks to establish a reference framework of ethical principles addressing issues related to the development, adoption, and application of AI technologies within the Cultural and Creative Sectors (CCS) and beyond.

The document was created at the Salzburg Global Seminar program on “Creating Futures: Art and AI for Tomorrow’s Narratives” as an initial concept piece and framework for further interviews, investigation, research, and development. This is intended to be an introductory document including additional ethical considerations that arose during the program.

The authors of this charter hail from different socio-political and economic realities including Austria, Argentina, Egypt, Ireland, Japan, Malta, Mexico, the United States, and Uruguay. It represents a collaborative effort among artists, technologists, philosophers, ethicists, writers, and policymakers to address the emerging challenges at the intersection of AI, creative and critical thinking, the arts, culture, and human rights.

The mission of this charter is to elevate both critical and creative approaches without compromising the delicate balance of life on Earth. Whether human or non-human, all species deserve thriving conditions, and this charter endeavors to ensure that AI becomes a catalyst for vibrant cultural expression while safeguarding the rights of those who wish to opt out of AI’s integration into multiple fields.

The influence of AI is revolutionary, shaping both tangible and digital landscapes alike. This demands a resilient ethical framework to navigate its use in sensitive, high-stakes domains, ranging from the intricate world of the arts to other far-reaching spheres of impact.

The working definition of the term “artificial intelligence” used for the drafting of this document is that used by the Organization for Economic Co-operation and Development (OECD): “an AI system is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.”

2. Core Principles

Traditional, current, and emerging ethical AI frameworks, such as UNESCO's Recommendation on the Ethics of Artificial Intelligence, the EU AI Act, the United Nations resolution on the promotion of “safe, secure, and trustworthy” artificial intelligence systems, and the US Blueprint for an AI Bill of Rights, have addressed many of the complexities and potential risks associated with AI, including questions of cultural plurality, economic variety, and ecological inclusivity.

Building on these foundational frameworks and the insights gained at Salzburg Global Seminar, we aim to underscore ethical principles tailored to the current and future possible risks of AI, to better safeguard against potential harms, promote transparency, and ensure that AI systems genuinely align with the various nuances of societal values as the technology continues to evolve.

1. Do No Harm

This principle emphasizes the development and deployment of AI systems that prioritize the safety, well-being, and fair treatment of humans and living systems, ensuring that they operate securely and transparently, without infringing on privacy or safety and without perpetuating bias. This includes preventing the development and proliferation of AI that is designed, implicitly or explicitly, to propagate bias, misinformation, or disinformation, and regulating AI research for compliance with ethical standards. It mandates proactive measures to safeguard against potential risks, including robust testing, respecting psychological integrity, and preventing unintended consequences, to ensure AI acts as a beneficial tool for living systems, ecosystems, cultures, and society.

2. Governance

This principle ensures the responsible development and application of AI technologies by promoting equitable relationships via fair and transparent processes such that the interests of all players, irrespective of their degree of engagement with said technologies, are protected, and their rights to access real and perceived benefits are guaranteed. This can be achieved through the adoption of a comprehensive framework that regulates access to AI literacy and development with a focus on equity between stakeholders including future generations.

The following are some key principles and considerations. Their order does not reflect any assumed hierarchy of priority.

- Ensure responsible development and application of AI by promoting equitable relationships and transparent processes across the AI value chain.

- Protect rights and ensure fair access to benefits for all stakeholders, irrespective of their engagement level.

- Foster fairness by preventing biases and appreciating cultural uniqueness.

- Maintain decision-making transparency and hold developers accountable for AI outcomes.

- Respect intellectual property; uphold the privacy of users’ data, including but not limited to neural rights.

- Promote decentralized power structures to prevent monopolies and ensure a fair distribution of benefits.

- Implement opt-in and opt-out mechanisms to ensure user autonomy in AI technologies. Individuals or groups must explicitly opt into AI services, providing clear, informed consent before AI is activated, applied, or used. They should also have easy, effective means to opt out at any time without penalties or losing access to essential non-AI services while maintaining fairness and accessibility. Transparency about AI operations, data usage, and potential impacts is crucial. AI systems must respect user preferences, promptly excluding their data upon opting out, and ensuring previously collected data is deleted or anonymized.

3. Diversity

This principle seeks to mitigate the risks of uniformity in decision-making processes and AI outputs, as well as the assumed conformity arising from AI use, proliferation, and imposition. It further seeks to respect and promote a rich pluralism across life systems globally.

- Protect human and non-human species and various forms of intelligence.

- Develop AI in a way that respects all life forms and the ecological systems they depend on.

- Counteract the risks of uniform AI outputs and decision-making processes that may cause homogeneity by promoting diversity that reflects the human experience.

- Foster inclusivity and address cultural, ethnic, and gender diversity in AI development.

- Ensure AI technologies are accessible and beneficial across diverse societal segments, providing equitable access.

- Integrate diverse perspectives into AI development, including representation, cultural sensitivity, and routine bias checks.

- Implement technical measures to prevent homogenized outputs and ensure AI respects minority voices and cultural diversity and actively contributes to cultural preservation.

- Develop diverse underlying models and architectures in AI.

4. Positive Impacts

The aim is to ensure that AI not only avoids harm but actively contributes to human, ecological, societal, and global well-being, fostering a holistically sustainable and thriving future. Positiveness in AI is defined by its capacity to generate beneficial outcomes that enhance quality of life and enable thriving across multiple dimensions and domains. This includes improving human experiences, supporting ecological balance, supporting various cultures and intelligences, advancing societal justice, and promoting global sustainability as well as creative and critical thinking.

3. Opportunities and Challenges

The development and deployment of AI present unprecedented opportunities, including, but not limited to, accelerated analytical tools, efficiency, precision, scalability, innovation, novel forms of expression, enhanced creative processes, and personalization.

Accelerated Analytical Tools: AI technologies offer advanced analytical capabilities that have the potential to enhance the understanding and interpretation of various forms of content, including but not limited to artistic content. Machine learning algorithms can analyze vast amounts of data, identify patterns, and provide valuable insights into user preferences, trends, and cultural influences, allowing professionals to expedite their analytical processes and increase the scale of their work.

Enhanced Precision: Automation technologies excel at executing repetitive tasks with consistent accuracy, as they are not prone to fatigue. Human-machine collaboration that draws on this strength could enable humans to achieve greater precision in their work.

Augmented Creativity: AI has the potential to augment the creative process by leveraging its analytical capabilities to generate novel insights and offer professionals new perspectives and ideas. This human-machine collaborative process has the potential to facilitate innovative approaches to problem-solving and content creation.

AI as a Medium: By integrating AI into their creative process, artists can explore new ways of generating and sketching out ideas, prototyping different aesthetic possibilities, engaging with emergent technologies, and responding to contemporary socio-cultural issues in dynamic and impactful ways.

Increased Personalization: AI has the potential to enhance quality of life by personalizing user experiences through data analysis, tailored content, and dynamic interfaces. Anticipatory design and micro-personalization are among the services that could improve users’ quality of life.

Healthcare and Medical Research: AI can revolutionize healthcare by providing precise diagnostics, personalized treatment plans, and accelerating medical research, leading to better patient outcomes and innovative medical solutions.

Education and Learning: AI can offer personalized learning experiences, adaptive learning platforms, and new educational tools that cater to the diverse needs of students, fostering a more inclusive and effective educational environment.

These advancements, however, also bring forth several challenges:

- The deployment of AI systems may contribute to a concentration of power, with major corporations and countries from the Global North potentially exacerbating the North-South divide.

- The use of AI can be seen as a form of digital colonization, where technologies developed primarily by entities in the Global North may impose their models, values, and priorities on other parts of the world.

- AI-based technologies can lead to market concentration, potentially creating monopolies and barriers that hinder competition and the entry of independent players into the AI ecosystem.

- The use of AI in various industries might accelerate job displacement and loss, raising concerns about the future of employment and the sustainability of workers' livelihoods.

- AI-based technologies may promote homogeneity in cultural outputs, potentially stifling diversity. They may also promote homogeneity of thought and loss of critical and creative thinking.

- AI systems might contribute to cultural and language erasure, as their widespread use can marginalize non-dominant cultures and languages.

- The unmonitored use of cultural data to train AI software can lead to instances of systematic cultural appropriation and abuse unless systems are put in place to counteract it.

- AI-based technologies can perpetuate biases, stereotypes, and discrimination if not carefully designed, reflecting and reinforcing existing social prejudices.

- AI technologies may facilitate the spread of disinformation and misinformation, potentially eroding democratic processes, undermining trust in institutions, and exacerbating polarization.

- The increasing reliance on AI technologies might lead to dehumanization. The pervasive integration of AI into daily life and decision-making processes risks reducing human interactions, experiences, and emotions to mere data points, potentially eroding the intrinsic value of human dignity and the richness of the human experience and human intelligence.

- The non-consensual use of data extracted from cultural expressions such as paintings, photographs, texts, audio, or video, as well as biometrics, among others, to train AI systems may pose threats to the moral and material rights of artists.

- The deployment of AI can lead to enhanced surveillance capabilities, raising significant concerns about privacy, security, and the potential misuse of personal information.

- It is unclear if and how AI systems making critical decisions in areas such as healthcare, criminal justice, and employment are designed to uphold ethical standards and ensure health and well-being, fairness, accountability, and transparency. These systems may introduce bias and danger to health and well-being without transparency and accountability.

- The energy consumption and environmental footprint of AI technologies, particularly those involving large-scale data centers and computational resources, are immense and may be an existential environmental threat.

- AI systems that do not remain under human control pose significant threats to safety and ethical standards. Unsupervised autonomous behaviors can lead to unpredictable and potentially harmful outcomes, undermining human oversight and accountability.

- AI systems that lack transparency and explainability create substantial risks by obscuring how decisions are made.

4. Priority Actions

Priority actions are essential to addressing the current ethical gaps highlighted in this charter. Proactive measures such as the continuous engagement of multiple stakeholders, the holistic implementation of core principles delineated above, and the enforcement of emerging regulations are critical to ensuring AI-enabled technologies are responsibly developed and integrated within society. Without prompt intervention by all, we stand to continue exacerbating socioeconomic inequalities. As such, we propose the following set of priority actions:

Incentivize local production

Designing and developing fair and equitable AI for everyone demands recognition of local communities and their unique needs and solutions. Specific ways to collaborate include:

- AI awareness and literacy: Enhance understanding of AI technologies and their ethical implications at the community level through educational initiatives.

- Support grassroots initiatives and community-led, community-owned engagement across the entire AI lifecycle: Foster grassroots participation in AI development to ensure local contexts and needs are addressed.

- Intellectual property of developed products and services: Protect the rights and ownership of locally developed AI innovations to encourage sustainable economic growth.

Safeguard human autonomy and current and emerging social structures

Human autonomy should be prioritized over AI automation. AI-enabled systems should be designed to complement and augment human decision-making capabilities rather than replace them. Moreover, preserving social structures involves mitigating the potential for AI-driven disruptions to power dynamics, the job market, and employment. This entails the following steps:

- Design AI to support, not supplant, human roles: Ensure AI systems enhance human capabilities without rendering human roles obsolete.

- Mitigate AI-driven disruptions: Develop policies and frameworks to address potential job displacement and changes in power dynamics caused by AI deployment.

- Encourage a variety of business models: Ensure that different perspectives and values are incorporated into AI development and integration, leading to more inclusive and ethical outcomes for all.

- Protect the rights of artists and creators whose works have been used to train AI systems.

Mitigate a concentration of power

Develop and enforce regulations and policies that promote decentralized power structures within the AI industry. Encourage a variety of business models and support the growth of small and medium-sized enterprises (SMEs) and startups, fostering a competitive and diverse AI ecosystem. This can prevent a few large corporations from monopolizing the industry and ensure that the benefits of AI are widely distributed.

Develop mechanisms for ongoing system monitoring